Faculty Guidance

Setting Expectations for Programs, Courses and Assignments

1.

Syllabus Guide: Including Generative AI Guidance in your Course Syllabus

To help students navigate these tools ethically and effectively, we strongly encourage faculty to include a short, clear statement in their course syllabi about whether, how, and when students may use generative AI tools.

You may wish to include:

- Whether AI tools are permitted, restricted, or prohibited.

- The rationale for your decision (especially how it connects to learning outcomes).

- Any limitations on their use (e.g., not during exams, only for brainstorming).

- Expectations for proper attribution or citation of AI-generated content.

- A reminder about privacy concerns when using AI platforms (e.g., not entering personal or sensitive data).

Sample Syllabus Statements for Faculty

The following sample syllabus statements offer flexible options faculty can adapt based on the level of AI integration appropriate for their course. These statements are designed to promote academic integrity while supporting meaningful learning. For practical applications and guidance, see the section titled “Real Life Examples at JIBC.”

- OPTION 1: AI TOOLS NOT PERMITTED

Use of Generative AI Tools is Prohibited

The use of generative AI tools (e.g., ChatGPT, Microsoft Copilot, Gemini) to complete or support any graded assignments or assessments in this course is not permitted unless explicitly stated otherwise. Submitting work generated by or heavily supported by AI tools may be considered academic misconduct under JIBC’s Student Academic Integrity Policy.

- OPTION 2: AI TOOLS PERMITTED WITH CONDITIONS

Limited Use of Generative AI Tools is Permitted

Students are permitted to use generative AI tools for specific purposes in this course, such as brainstorming ideas, summarizing content, or organizing research notes. However, AI-generated text must not be submitted as final work unless specifically approved. All use of AI tools must be acknowledged. Improper use without disclosure may be considered academic misconduct.

- OPTION 3: USE OF GENERATIVE AI IS ENCOURAGED

Responsible Use of Generative AI is Encouraged

This course encourages the thoughtful use of generative AI tools (e.g., ChatGPT) as part of the learning process. Students may use these tools to support their understanding, explore new ideas, or draft initial content. However, students are ultimately responsible for the accuracy, originality, and integrity of their work. AI-assisted content must be clearly acknowledged and cited following instructor guidance. For privacy reasons, do not input personal or sensitive information into any AI platform.

2.

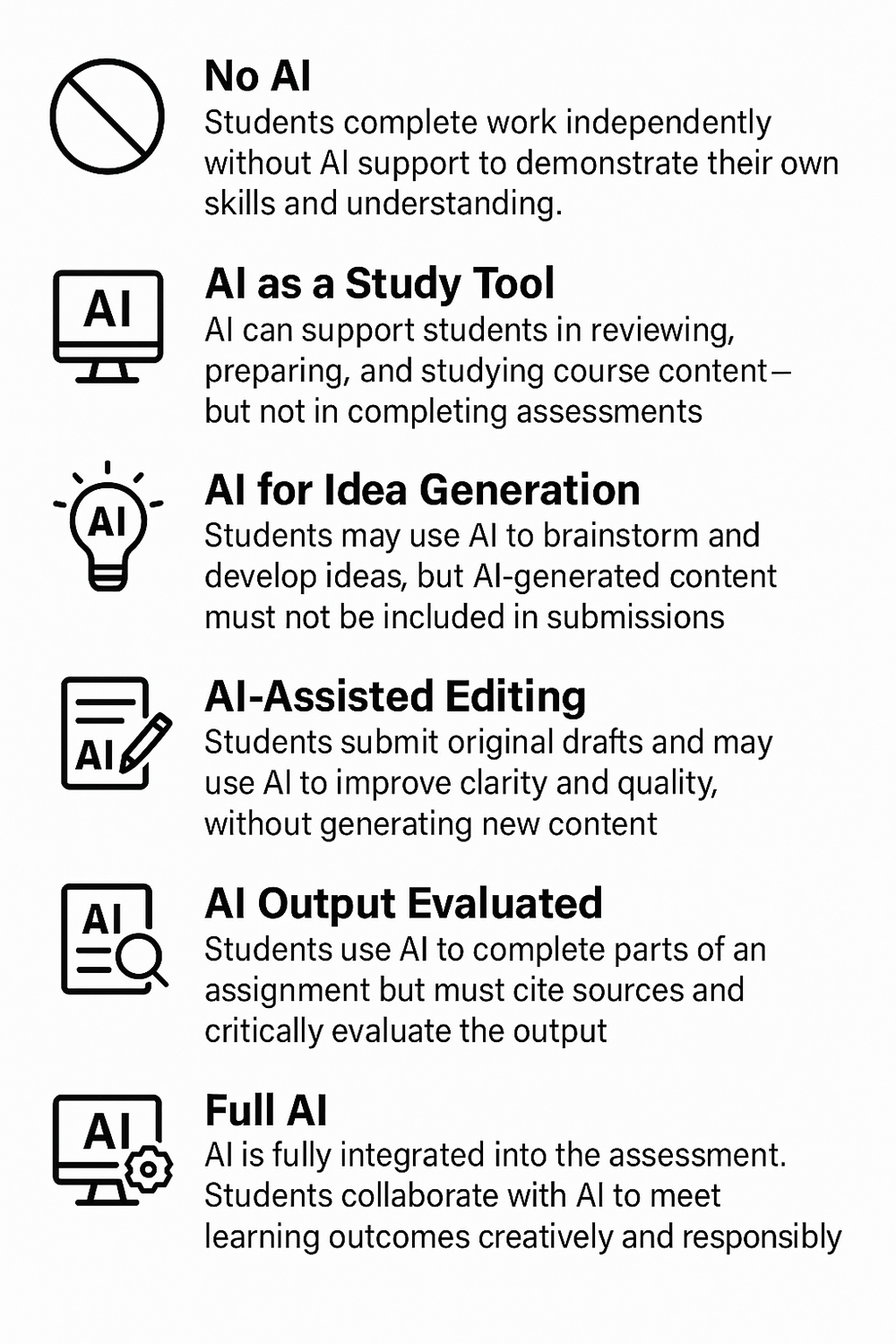

AI Readiness Scale

The AI Readiness Scale helps instructors determine appropriate levels of AI use in a course. It outlines six levels, from no AI involvement to full integration, ensuring alignment with learning outcomes and academic integrity expectations.

3.

Generative AI: Citing Your Use of AI

As generative AI (GenAI) tools like ChatGPT become more commonly used in academic settings—for brainstorming, summarizing, drafting, or even data analysis—it’s essential to cite them properly. Transparent citation practices not only uphold academic integrity but also clarify how these tools contributed to your work.

- Acknowledge use: Give credit for ideas or content generated by AI tools.

- Maintain academic integrity: Prevent plagiarism by being clear about what was created with AI assistance.

- Ensure transparency: Help instructors and peers understand how the tool influenced your work.

- Support reproducibility: Enable others to evaluate or replicate your process if needed.

Examples

APA (7th Edition)

In-text citation: (OpenAI, 2025)

Narrative citation: OpenAI (2025)

Reference:

OpenAI. (2025). ChatGPT (May 14 version) [Large language model]. https://chat.openai.com/chat

Example: ChatGPT emphasized the role of fossil fuel consumption in climate change when prompted with “What causes climate change?” (OpenAI, 2025).

Chicago Style

Footnote:

OpenAI’s ChatGPT, response to query from author, May 15, 2025.

Bibliography:

OpenAI’s ChatGPT. Response to “What are the main challenges of climate adaptation?” ChatGPT, OpenAI, May 15, 2025. https://chat.openai.com/chat

MLA Style

Citation:

OpenAI. “ChatGPT.” ChatGPT, https://chat.openai.com/chat. Accessed 20 May 2025.

IEEE Style

Citation:

[1] ChatGPT, response to author query. OpenAI [Online]. Available: https://chat.openai.com/chat. (Accessed: May 20, 2025).

4.

Assessment and AI

The emergence of generative AI (GenAI) is reshaping how we approach assessment in education. Its capacity to assist with complex tasks, from summarizing information to generating thoughtful written responses, is changing how we think about assessment design. This presents an opportunity to evolve our practices in ways that uphold academic integrity while enhancing learning outcomes.

Faculty are beginning to reimagine assessments to foster deeper learning. By thoughtfully integrating AI, we can support the development of key competencies such as critical thinking, ethical decision-making, and professional judgment, all of which are essential for success in the high-stakes, real-world contexts our learners are preparing for.

5.

Designing Assessments that Promote Thoughtful Use of GenAI

As generative AI (GenAI) tools become more common in academic settings, assessment design should evolve to foster authentic learning, critical thinking, and ethical engagement with AI. The goal is not to ban its use, but to guide learners in using these tools responsibly and reflectively. Here are four strategies to support meaningful integration:

- Encourage learners to develop case analyses or action plans based on real-world or current events. These complex, context-rich tasks promote originality and deeper engagement.

- Create opportunities for students to share how they used GenAI in their learning process. This builds ethical awareness and fosters transparency around tool use.

- Evaluate drafts, revisions, peer feedback, and reflective components, not just the final product. This highlights learner growth and discourages over-reliance on AI-generated content.

- Design assignments that require connections to local policies, community needs, or specific scenarios. This encourages learners to apply AI in ways that are relevant and grounded.